Revisions

- doc/index.rst: 1 addition, 1 deletion

- doc/tutorial/auto_examples/auto_examples_jupyter.zip: 0 additions, 0 deletions

- doc/tutorial/auto_examples/auto_examples_python.zip: 0 additions, 0 deletions

- doc/tutorial/auto_examples/combo/sg_execution_times.rst: 4 additions, 4 deletions

- doc/tutorial/auto_examples/index.rst: 19 additions, 19 deletions

- doc/tutorial/auto_examples/mumbo/sg_execution_times.rst: 2 additions, 2 deletions

- doc/tutorial/auto_examples/mvml/sg_execution_times.rst: 2 additions, 2 deletions

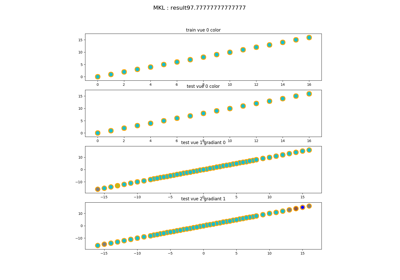

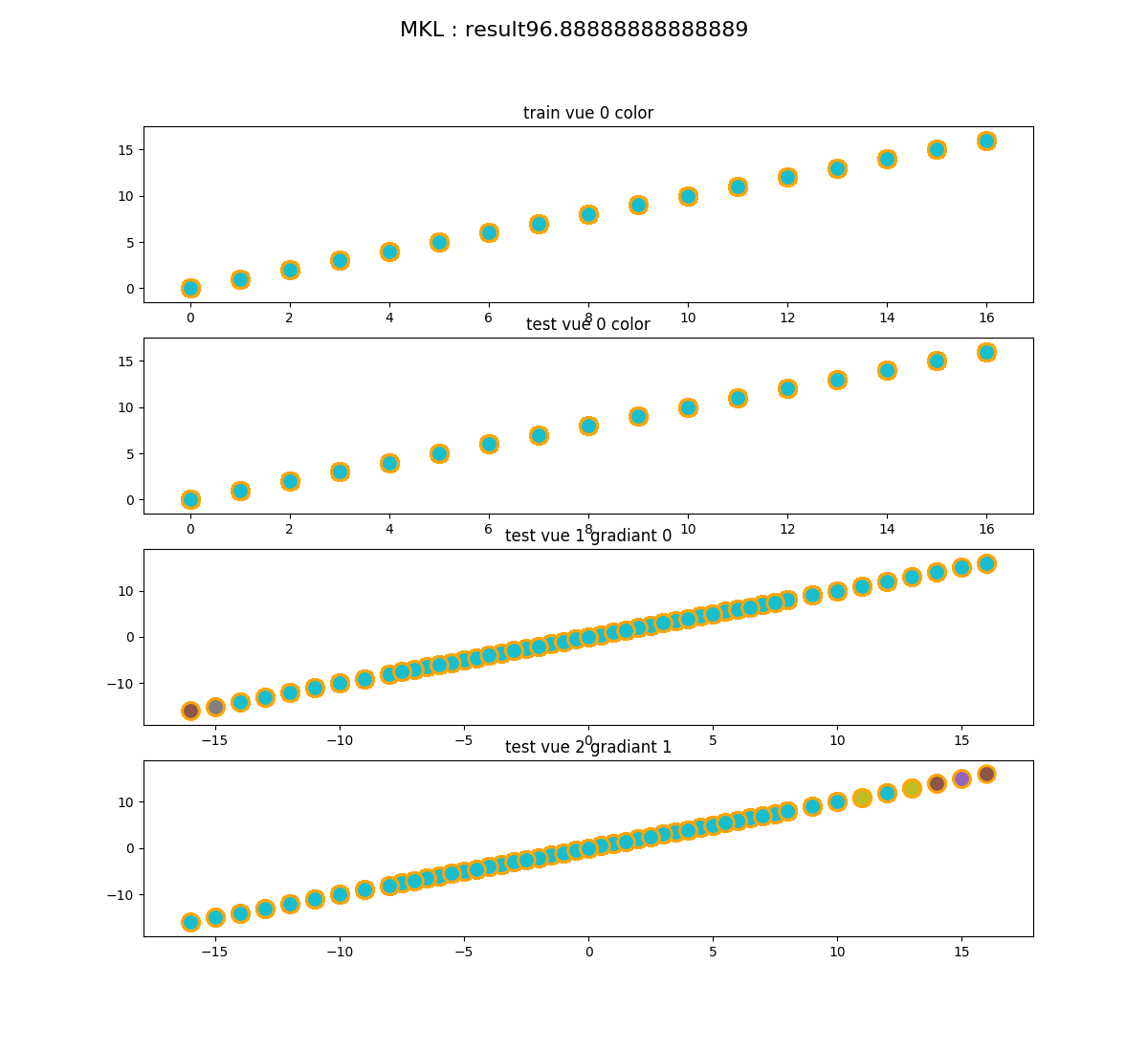

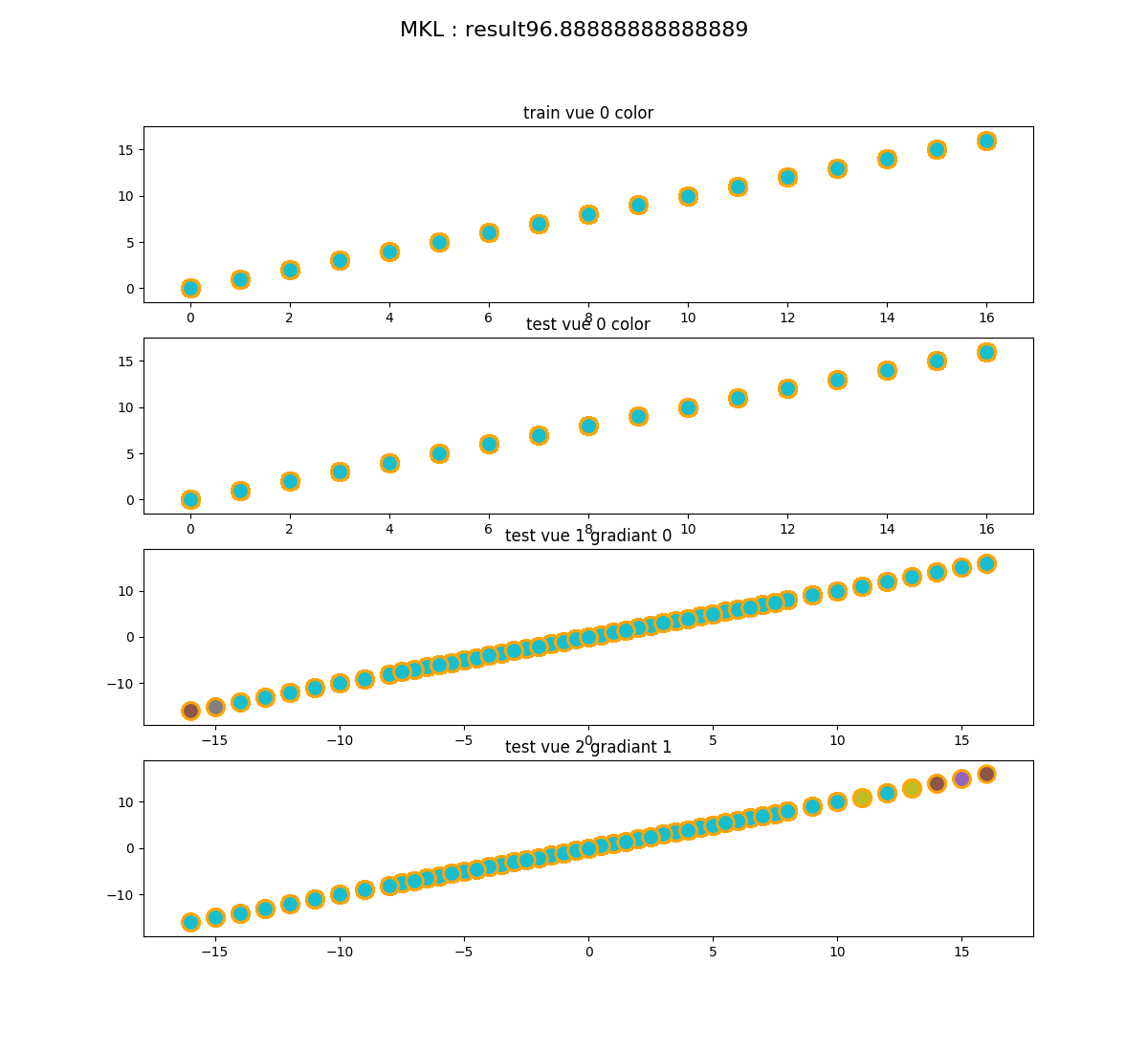

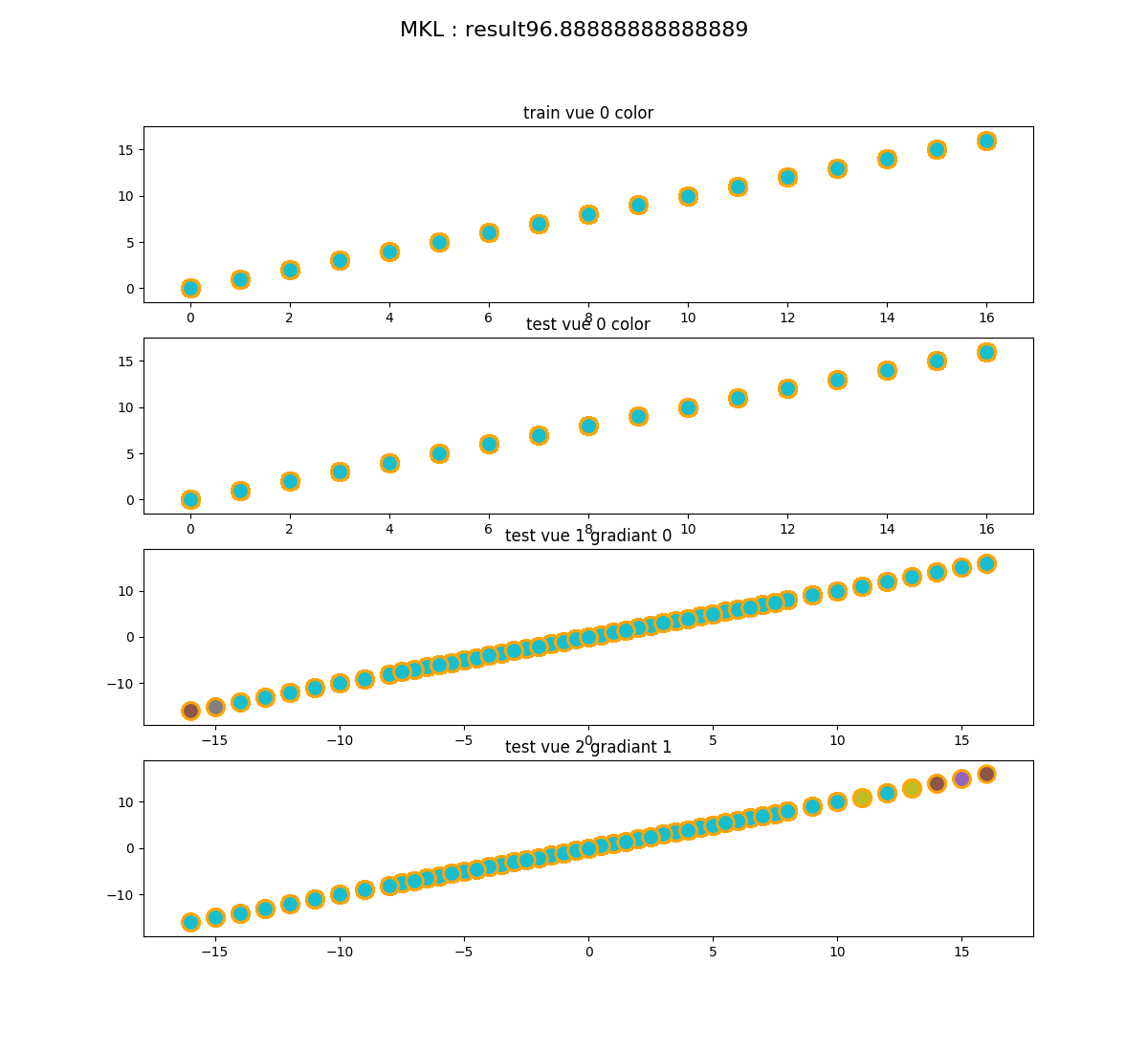

- doc/tutorial/auto_examples/usecase/images/sphx_glr_plot_usecase_exampleMKL_001.png: 0 additions, 0 deletions

- doc/tutorial/auto_examples/usecase/images/thumb/sphx_glr_plot_usecase_exampleMKL_thumb.png: 0 additions, 0 deletions

- doc/tutorial/auto_examples/usecase/plot_usecase_exampleMKL.ipynb: 1 addition, 1 deletion

- doc/tutorial/auto_examples/usecase/plot_usecase_exampleMKL.py: 0 additions, 2 deletions

- doc/tutorial/auto_examples/usecase/plot_usecase_exampleMKL.py.md5: 1 addition, 1 deletion

- doc/tutorial/auto_examples/usecase/plot_usecase_exampleMKL.rst: 12 additions, 12 deletions

- doc/tutorial/auto_examples/usecase/plot_usecase_exampleMKL_codeobj.pickle: 0 additions, 0 deletions

- doc/tutorial/auto_examples/usecase/sg_execution_times.rst: 12 additions, 12 deletions

- doc/tutorial/auto_examples/usecase/usecase_function.rst: 9 additions, 7 deletions

- doc/tutorial/auto_examples/usecase/usecase_function_codeobj.pickle: 0 additions, 0 deletions

- doc/tutorial/estimator_template.rst: 129 additions, 0 deletions

- doc/tutorial/install_devel.rst: 2 additions, 1 deletion

- doc/tutorial/times.rst: 1 addition, 1 deletion

doc/tutorial/estimator_template.rst

0 → 100644